Some time ago, I wrote about the interesting situation we had with emulation and Version 51 of the Chrome browser – that is, our emulations stopped working in a very strange way and many people came to the Archive’s inboxes asking what had broken. The resulting fix took a lot of effort and collaboration with groups and volunteers to track down, but it was successful and ever since, every version of Chrome has worked as expected.

But besides the interesting situation with this bug (it actually made us perfectly emulate a broken machine!), it also brought into a very sharp focus the hidden, fundamental aspect of Browsers that can easily be forgotten: Each browser is an opinion, a lens of design and construction that allows its user a very specific facet of how to address the Internet and the Web. And these lenses are something that can shift and turn on a dime, and change the nature of this online world in doing so.

An eternal debate rages on what the Web is “for” and how the Internet should function in providing information and connectivity. For the now-quite-embedded millions of users around the world who have only known a world with this Internet and WWW-provided landscape, the nature of existence centers around the interconnected world we have, and the browsers that we use to communicate with it.

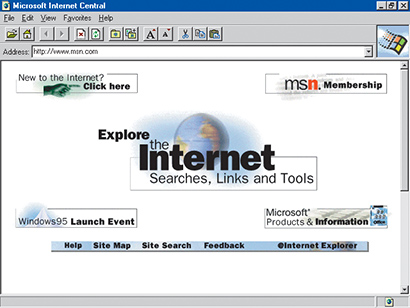

Avoiding too much of a history lesson at this point, let’s instead just say that when Browsers entered the landscape of computer usage in a big way circa 1995, after being one of several resource-intensive experimental programs, the effect on computing experience and acceptance was unparalleled since the plastic-and-dreams home computer revolution of the 1980s. Suddenly, in one program came basically all the functions of what a computer might possibly do for an end user, all of it linked and described and seemingly infinite. The more technically-oriented among us can point out the gaps in the dream and the real-world efforts behind the scenes to make things do what they promised, of course. But the fundamental message was: Get a Browser, Get the Universe. Throughout the late 1990s, access came in the form of mailed CD-ROMs, or built-in packaging, or Internet Service Providers sending along the details on how to get your machine connected, and get that browser up and running.

As I’ve hinted at, though, this shellac of a browser interface was the rectangular window to a very deep, almost Brazil–like series of ad-hoc infrastructure, clumsily-cobbled standards and almost-standards, and ever-shifting priorities in what this whole “WWW” experience could even possibly be. It’s absolutely great, but it’s also been absolutely arbitrary.

With web anniversaries aplenty now coming into the news, it’ll be very easy to forget how utterly arbitrary a lot of what we think the “Web” is, happens to be.

There’s no question that commercial interests have driven a lot of browser features – the ability to transact financially, and to be sure of the prices or offers you are being shown, is of primary interest to vendors. Encryption, password protection, multi-factor authentication and so on are sometimes given lip service for private communications, but they’ve historically been presented for the store to ensure the cash register works. From the early days of a small padlock icon being shown locked or unlocked to indicate “safe”, to official “badges” or “certifications” being part of a webpage, the browsers have frequently shifted their character to promise commercial continuity. (The addition of “black box” code to browsers to satisfy the ability to stream entertainment is a subject for another time.)

Flowing from this same thinking has been the overriding need for design control, where the visual or interactive aspects of webpages are the same for everyone, no matter what browser they happen to be using. Since this was fundamentally impossible in the early days (different browsers have different “looks” no matter what), the solutions became more and more involved:

- Use very large image-based mapping to control every visual aspect

- Add a variety of specific binary “plugins” or “runtimes” by third parties

- Insist on adoption of a number of extra-web standards to control the look/action

- Demand all users use the same browser to access the site

Evidence of all these methods pops up across the years, with varying success.

Some of the more well-adopted methods include the Flash runtime for visuals and interactivity, and the use of Java plugins for running programs within the confines of the browser’s rectangle. Others, such as the wide use of Rich Text Format (.RTF) for reading documents, or the RealAudio/RealVideo plugins, gained followers or critics along the way, and ultimately faded into obscurity.

And as for demanding all users use the same browser… well, that still happens, but not with the same panache as the old Netscape Now! buttons.

This puts the Internet Archive into a very interesting position.

With 20 years of the World Wide Web saved in the Wayback Machine, and URLs by the billions, we’ve seen the moving targets move, and how fast they move. Where a site previously might be a simple set of documents and instructions that could be arranged however one might like, there is now a whole family of sites with much more complicated inner workings than can be captured by any external party, much as you cannot really capture a museum by photographing its paintings through a window from the courtyard.

When you visit the Wayback and pull up that old site and find things look different, or are rendered oddly, that’s a lot of what’s going on: weird internal requirements, experimental programming, or tricks and traps that only worked in one brand of browser and one version of that browser from 1998. The lens shifted; the mirror has cracked since then.
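For anyone who wants to do that pulling-up from a script rather than a search box, here is a minimal sketch in Python. It assumes the publicly visible Wayback URL pattern (a timestamp followed by the original address) and the JSON shape returned by the Wayback availability endpoint. The function names and the example address are my own illustration, not anything official, and nothing here touches the network.

```python
import json
from typing import Optional
from urllib.parse import urlencode


def snapshot_url(original_url: str, timestamp: str) -> str:
    """Build a Wayback address for a capture near `timestamp` (YYYYMMDDhhmmss)."""
    return f"https://web.archive.org/web/{timestamp}/{original_url}"


def availability_query(original_url: str, timestamp: str) -> str:
    """Build the availability lookup URL for the capture closest to `timestamp`."""
    params = urlencode({"url": original_url, "timestamp": timestamp})
    return "https://archive.org/wayback/available?" + params


def closest_snapshot(response_text: str) -> Optional[str]:
    """Pull the closest capture's URL out of an availability response, if any."""
    data = json.loads(response_text)
    closest = data.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url")
    return None  # nothing was captured, or the capture is not available


if __name__ == "__main__":
    # example.com stands in for whatever 1998-era site you are chasing.
    print(snapshot_url("http://example.com/", "19981201000000"))
    print(availability_query("http://example.com/", "19981201"))
```

Getting the capture is the easy half. Whether a present-day browser renders it the way that one 1998 browser did is the part no URL can promise.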

This is a lot of philosophy and stray thoughts, but what am I bringing this up for?

The browsers that we use today, the Firefoxes and the Chromes and the Edges and the Braves and the mobile white-label affairs, are ever-shifting in their own right, more than ever before, and should be recognized as such.

It was inevitable that constant-update paradigms would become dominant on the Web: you start a program and it does something and suddenly you’re using version 54.01 instead of version 53.85. If you’re lucky, there might be a “changes” list, but that luck varies, because many simply write “bug fixes”. In these updates are the closing of serious performance or security issues – and as someone who remembers the days when you might have to mail in for a floppy disk, sent a few weeks later, to make your program work, I can totally get behind the new “we fixed it before you knew it was broken” world we live in. Everything does this: phones, game consoles, laptops, even routers and medical equipment.

But along with this shifting of versions comes the occasional fundamental change in what browsers do, along with making some aspect of the Web obsolete in a very hard-lined way.

Take, for example, Gopher, a (for lack of an easier description) proto-web that allowed machines to be “browsed” for information that would be easy for users to find. The ability to search, to grab files or writings, and to share your own pools of knowledge were all part of the “Gopherspace”. It was also rather non-graphical by nature and technically oriented at the time, and the graphical “WWW” utterly flattened it when the time came.

But since Gopher had been a not-insignificant part of the Internet when web browsers were new, many of them would wrap in support for Gopher as an option. You’d use the gopher:// URI, and much like the ftp:// or file:// URIs, it co-existed with http:// as a method for reaching the world.

Until it didn’t.

Microsoft, citing security concerns, dropped Gopher support out of its Internet Explorer browser in 2002. Mozilla, after a years-long debate, did so in 2010. Here’s the Mozilla Firefox debate that raged over Gopher Protocol removal. The functionality was later brought back externally in the form of a Gopher plugin. Chrome never had Gopher support. (Many other browsers have Gopher support, even today, but they have very, very small audiences.)

The Archive has an assembled collection of Gopherspace material here. From this material, as well as other sources, there are web-enabled versions of Gopherspace (basically, http:// versions of the gopher:// experience) that bring back some aspects of Gopher, if only to allow for a nostalgic stroll. But nobody would dream of making something brand new in that protocol, except to prove a point or for the technical exercise. The lens has refocused.
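For the technically curious, part of why those web-enabled bridges are feasible is that the protocol itself is tiny. As described in RFC 1436, a client opens a TCP connection (port 70 by convention), sends a selector string followed by a line break, and reads until the server hangs up. A menu comes back as tab-separated lines. What follows is a minimal sketch in Python under those assumptions; the names `GopherItem`, `build_request`, `parse_menu` and `fetch` are my own, and the host in the usage note is a placeholder rather than a real server.

```python
import socket
from typing import List, NamedTuple


class GopherItem(NamedTuple):
    item_type: str  # one character: "0" is a text file, "1" is another menu
    display: str    # the human-readable label
    selector: str   # the string to send back to fetch this item
    host: str
    port: int


def build_request(selector: str = "") -> bytes:
    """A Gopher request is just the selector followed by CRLF."""
    return selector.encode("latin-1") + b"\r\n"


def parse_menu(raw: bytes) -> List[GopherItem]:
    """Parse a menu response into items, skipping lines that do not fit."""
    items = []
    for line in raw.decode("latin-1").split("\n"):
        line = line.rstrip("\r")
        if line == ".":  # a lone period marks the end of the menu
            break
        fields = line[1:].split("\t")
        if not line or len(fields) < 4 or not fields[3].strip().isdigit():
            continue  # malformed or empty line: skip it rather than guess
        display, selector, host, port = fields[:4]
        items.append(GopherItem(line[0], display, selector, host, int(port)))
    return items


def fetch(host: str, selector: str = "", port: int = 70,
          timeout: float = 10.0) -> bytes:
    """Send one request and read until the server closes the connection."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_request(selector))
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks)
```

Calling `parse_menu(fetch("gopher.example.org"))` against a server that actually exists would hand back the top-level menu as a list. A bridge to http:// then mostly has to turn each item into a link.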

In the present, Flash is beginning a slow, harsh exile into the web pages of history – browser support dropping, and even Adobe whittling away support and upkeep of all of Flash’s forward-facing projects. Flash was a very big deal in its heyday – animation, menu interface, games, and a whole other host of what we think of as “The Web” depended utterly on Flash, and even specific versions and variations of Flash. As the sun sets on this technology, attempts to keep it viewable, like the Shumway project, will hopefully allow the lens a few more years to be capable of seeing this body of work.

As we move forward in this business of “saving the web”, we’re going to experience “save the browsers”, “save the network”, and “save the experience” as well. Browsers themselves drop or add entire components or functions, and being able to touch older material becomes successively more difficult, especially when you might have to use an older browser with security issues. Our in-browser emulation might be a solution, or special “filters” on the Wayback for seeing items as they were back then, but it’s not an easy task at all – and it’s a lot of effort to see information that is just a decade or two old. It’s going to be very, very difficult.

But maybe recognizing these browsers for what they are, and coming up with ways to keep these lenses polished and flexible, is a good way to start.

Have you ever clicked on a web link only to get the dreaded “404 Document not found” (dead page) message? Have you wanted to see what that page looked like when it was alive? Well, now you’re in luck.

The Internet Archive has been making print materials more accessible to the blind and print disabled for years, but now with Canada’s joining the

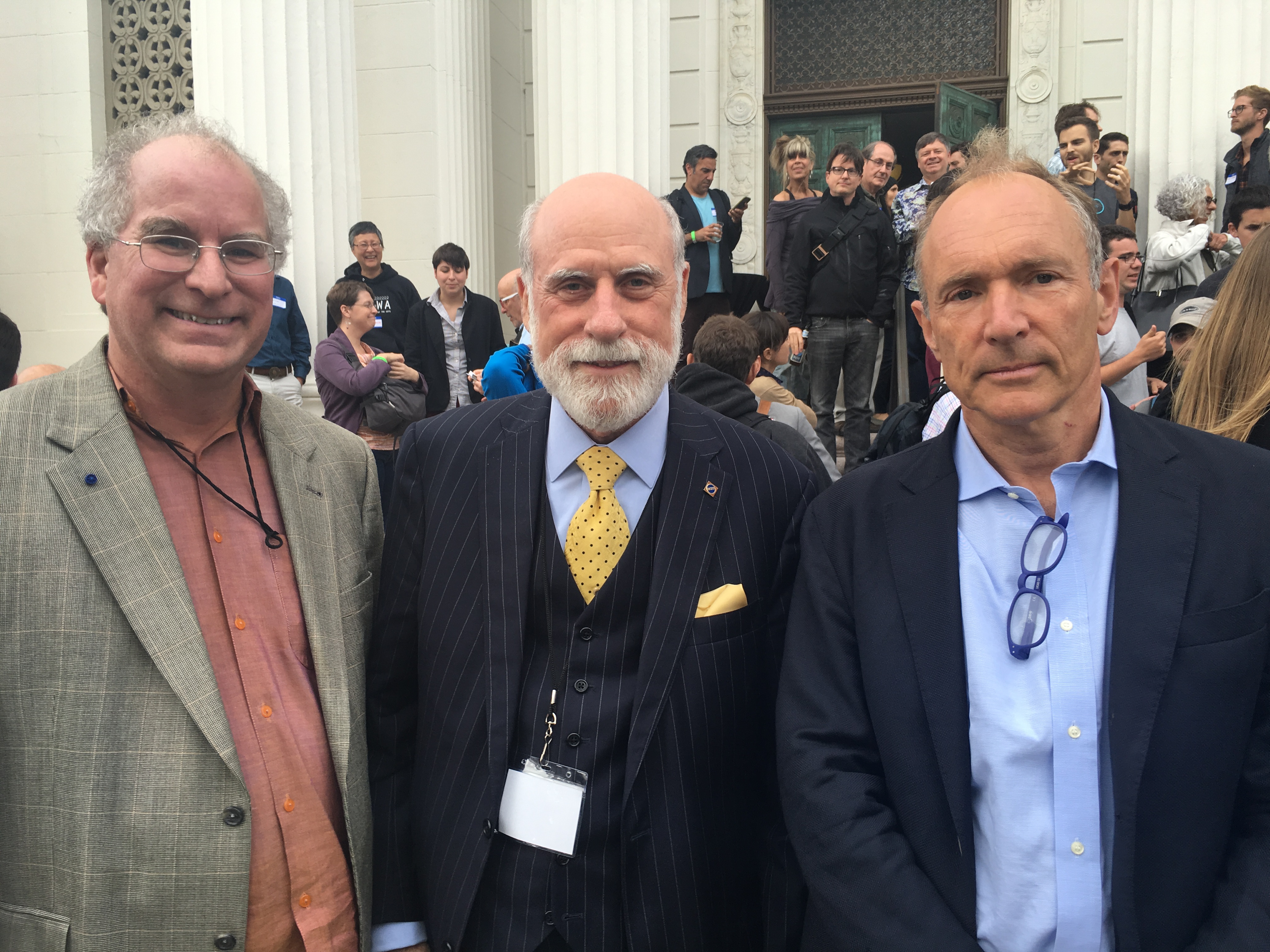

First in

Next, Vint Cerf, Google’s Internet Evangelist and “father of the Internet,”  volve to incorporate what we want from of our Web. He told us he created the

“Polyfill” was the final bit of advice I got from Tim Berners-Lee before he left.

In May, the US Copyright Office came to San Francisco to hear from various stakeholders about how well Section 512 of the Digital Millennium Copyright Act or DMCA is working. The Internet Archive appeared at these hearings to talk about the perspective of nonprofit libraries. The DMCA is the part of copyright law that provides for a “notice and takedown” process for copyrighted works on the Internet. Platforms who host content can get legal immunity if they take down materials when they get a complaint from the copyright owner.
